Search Engine Optimization (SEO) is one of the most important things to get right if you want your Magento store to succeed. To bring organic traffic to your store, it needs to rank among the top Google search results. Many SEO practices contribute to that, and a robots.txt file is one of them. With this file, you tell search engine crawlers which parts of your store they may crawl, and you can disallow the pages you don’t want to appear in search results.

Today, I will show you how to configure the Magento Robots.txt file in a few easy steps. This guide covers both Magento 1.x and Magento 2.x:

Configure Magento Robots.txt

First and foremost, you need to create the Robots.txt file. Create a file named robots.txt (all lowercase, because crawlers request exactly that name) in the root directory of your Magento store, then add the following declaration so that the rules apply to all crawlers:

User-agent: *

Next, declare the Magento store directories that you do not want search engines to crawl. Each declaration starts with ‘Disallow’:

    # Directories
    Disallow: /404/
    Disallow: /app/
    Disallow: /cgi-bin/
    Disallow: /downloader/
    Disallow: /errors/
    Disallow: /includes/
    Disallow: /lib/
    Disallow: /magento/
    Disallow: /pkginfo/
    Disallow: /report/
    Disallow: /scripts/
    Disallow: /shell/
    Disallow: /stats/
    Disallow: /var/

Now disallow the specific clean URLs that you do not want to be indexed by Google. It’s a great way to avoid duplicate content issues. If there are any other page URLs of your store that you want to keep out of the index, you can add them here as well.

    # Paths (clean URLs)
    Disallow: /catalog/product_compare/
    Disallow: /catalog/category/view/
    Disallow: /catalog/product/view/
    Disallow: /catalog/product/gallery/
    Disallow: /catalogsearch/
    Disallow: /checkout/
    Disallow: /control/
    Disallow: /contacts/
    Disallow: /customer/
    Disallow: /customize/
    Disallow: /newsletter/
    Disallow: /poll/
    Disallow: /review/
    Disallow: /sendfriend/
    Disallow: /tag/
    Disallow: /wishlist/
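If you want to sanity-check rules like these before uploading the file, one option is Python’s standard `urllib.robotparser` module. The snippet below is only a minimal sketch: the shortened rule list, the `is_allowed` helper, and the example.com URLs are placeholders I made up for illustration. The module does plain prefix matching, so it suits directory and clean-URL rules but not wildcard patterns.

```python
from urllib.robotparser import RobotFileParser

# A shortened excerpt of the rules (placeholder selection, not the full file).
# No blank line after User-agent: the parser treats one as the end of a group.
RULES = """\
User-agent: *
# Directories
Disallow: /app/
Disallow: /var/
# Paths (clean URLs)
Disallow: /checkout/
Disallow: /catalogsearch/
"""


def is_allowed(url, rules=RULES, agent="*"):
    """Return True if `agent` may crawl `url` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    for url in (
        "http://www.example.com/checkout/cart/",
        "http://www.example.com/blue-shirt.html",
    ):
        print(url, "->", "allowed" if is_allowed(url) else "blocked")
```

A product page such as `/blue-shirt.html` should come back as allowed, while anything under `/checkout/` should come back as blocked.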

The next step in configuring Magento robots.txt is to exclude the common Magento files in the root directory from being indexed:

    # Files
    Disallow: /cron.php
    Disallow: /cron.sh
    Disallow: /error_log
    Disallow: /install.php
    Disallow: /LICENSE.html
    Disallow: /LICENSE.txt
    Disallow: /LICENSE_AFL.txt
    Disallow: /STATUS.txt

Next, disallow your included and structural files by type, such as .js, .css, and .php files. Then disallow the paged URLs, session ID URLs, and pager limit URLs that Magento generates dynamically:

    # Paths (no clean URLs)
    Disallow: /*.js$
    Disallow: /*.css$
    Disallow: /*.php$
    Disallow: /*?p=*&
    Disallow: /*?SID=
    Disallow: /*?limit=all
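The `*` and `$` characters in these rules are wildcards honored by major crawlers such as Googlebot: `*` stands for any run of characters, and a trailing `$` anchors the rule to the end of the URL. Python’s `urllib.robotparser` does not interpret them, so here is a rough sketch of how the matching works, built on a regular expression. The helper names `rule_to_regex` and `is_blocked` are hypothetical, and this is an approximation for experimenting, not any crawler’s actual implementation.

```python
import re

# The wildcard rules from this step.
WILDCARD_RULES = [
    "/*.js$",
    "/*.css$",
    "/*.php$",
    "/*?p=*&",
    "/*?SID=",
    "/*?limit=all",
]


def rule_to_regex(rule):
    """Translate a robots.txt path rule into a compiled regular expression."""
    anchored = rule.endswith("$")
    if anchored:
        rule = rule[:-1]
    # Escape the literal pieces and let each '*' match any run of characters.
    body = ".*".join(re.escape(part) for part in rule.split("*"))
    return re.compile("^" + body + ("$" if anchored else ""))


def is_blocked(path, rules=WILDCARD_RULES):
    """Return True if any rule matches the URL path (query string included)."""
    return any(rule_to_regex(rule).match(path) for rule in rules)


if __name__ == "__main__":
    for path in ("/js/app.js", "/js/app.js?v=2", "/shoes.html?p=2&limit=12"):
        print(path, "->", "blocked" if is_blocked(path) else "allowed")
```

Note how `/js/app.js?v=2` slips past `/*.js$`: because of the `$`, the rule only matches URLs that end in `.js`.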

Finally, reference your sitemap.xml by adding the following line:

Sitemap: http://www.example.com/sitemap.xml

Your final Magento Robots.txt file will be:

    User-agent: *
    # Directories
    Disallow: /404/
    Disallow: /app/
    Disallow: /cgi-bin/
    Disallow: /downloader/
    Disallow: /errors/
    Disallow: /includes/
    Disallow: /lib/
    Disallow: /magento/
    Disallow: /pkginfo/
    Disallow: /report/
    Disallow: /scripts/
    Disallow: /shell/
    Disallow: /stats/
    Disallow: /var/
    # Paths (clean URLs)
    Disallow: /catalog/product_compare/
    Disallow: /catalog/category/view/
    Disallow: /catalog/product/view/
    Disallow: /catalog/product/gallery/
    Disallow: /catalogsearch/
    Disallow: /checkout/
    Disallow: /control/
    Disallow: /contacts/
    Disallow: /customer/
    Disallow: /customize/
    Disallow: /newsletter/
    Disallow: /poll/
    Disallow: /review/
    Disallow: /sendfriend/
    Disallow: /tag/
    Disallow: /wishlist/
    # Files
    Disallow: /cron.php
    Disallow: /cron.sh
    Disallow: /error_log
    Disallow: /install.php
    Disallow: /LICENSE.html
    Disallow: /LICENSE.txt
    Disallow: /LICENSE_AFL.txt
    Disallow: /STATUS.txt
    # Paths (no clean URLs)
    Disallow: /*.js$
    Disallow: /*.css$
    Disallow: /*.php$
    Disallow: /*?p=*&
    Disallow: /*?SID=
    Disallow: /*?limit=all

    Sitemap: http://www.example.com/sitemap.xml
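If you would rather generate the file than type it by hand, something like the following Python sketch could assemble it from grouped lists. The `build_robots_txt` function and the shortened groups are my own illustration, not part of Magento; swap in the full lists from this section and your own sitemap URL.

```python
def build_robots_txt(groups, sitemap_url, agent="*"):
    """Assemble robots.txt text from a {comment: [paths]} mapping."""
    lines = [f"User-agent: {agent}"]
    for comment, paths in groups.items():
        lines.append(f"# {comment}")
        lines.extend(f"Disallow: {path}" for path in paths)
    lines.append("")  # blank line before the sitemap reference
    lines.append(f"Sitemap: {sitemap_url}")
    return "\n".join(lines) + "\n"


# Shortened example groups (placeholders for the full lists).
EXAMPLE_GROUPS = {
    "Directories": ["/app/", "/var/"],
    "Files": ["/cron.php"],
}

EXAMPLE_TEXT = build_robots_txt(
    EXAMPLE_GROUPS, "http://www.example.com/sitemap.xml"
)

if __name__ == "__main__":
    print(EXAMPLE_TEXT, end="")
```

You could then save the returned text as robots.txt in the root directory of your store.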

The process to configure Magento robots.txt is now complete. You can now move on to the next phase.

Configure Magento 2 Robots.txt

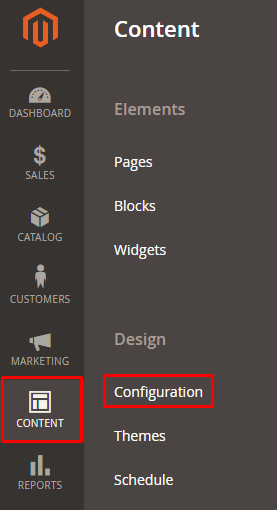

To configure Magento 2 robots.txt, first navigate to CONTENT → Design → Configuration in the admin panel of your Magento 2 store:

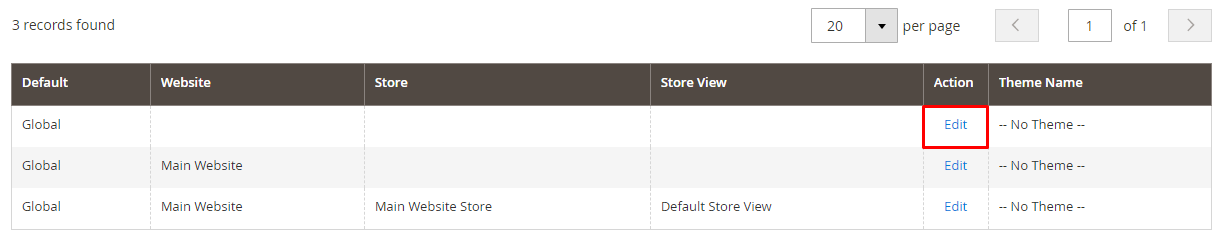

Now click on Edit:

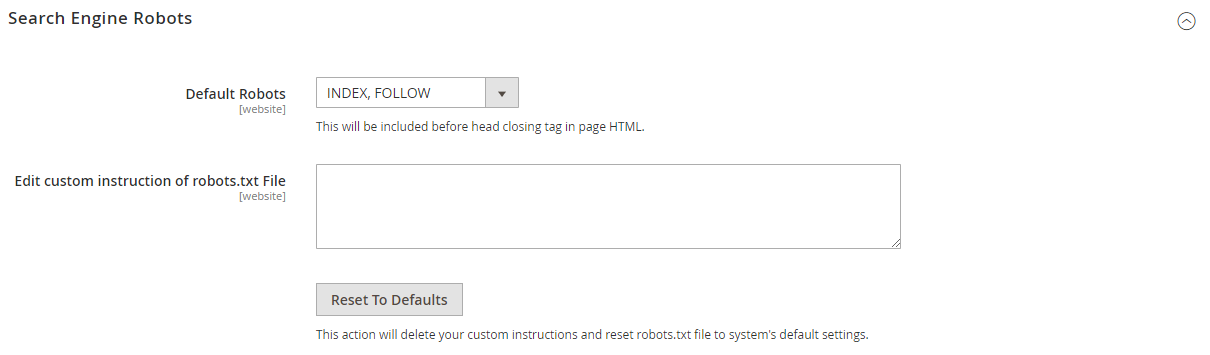

Then scroll down the page until you see the Search Engine Robots section, and expand it:

- The Default Robots field has four options. Choose one according to your needs:

INDEX, FOLLOW: If you want web crawlers to index your store’s pages and also follow the links on them.

NOINDEX, FOLLOW: If you don’t want web crawlers to index your Magento 2 store’s pages but still want them to follow the links on them.

INDEX, NOFOLLOW: If you want web crawlers to index your store’s pages but don’t want them to follow the links on them.

NOINDEX, NOFOLLOW: If you don’t want web crawlers to index your store’s pages and also don’t want them to follow the links on them.

- Edit custom Instruction of Robots.txt file: This field is used to add custom instructions. I will discuss them later in this tutorial.

- Reset To Defaults button: Clicking it will remove your custom instructions and restore the default instructions.

Now just click on Save Configuration to configure Magento 2 Robots.txt.
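To confirm which Default Robots value your storefront actually serves, you can look for the robots meta tag in a page’s HTML. The sketch below assumes the setting is rendered as a `<meta name="robots" content="...">` tag in the page head; the `RobotsMetaParser` class and the sample HTML are made up for illustration, so test it against the source of one of your own pages.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives from a <meta name="robots"> tag, if present."""

    def __init__(self):
        super().__init__()
        self.directives = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if tag == "meta" and name == "robots":
            content = attributes.get("content") or ""
            self.directives = [
                part.strip().upper() for part in content.split(",") if part.strip()
            ]


def robots_directives(html):
    """Return the robots directives found in `html`, or None if there are none."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


# Hypothetical page source with the NOINDEX, FOLLOW option selected.
SAMPLE_HTML = (
    '<html><head><meta name="robots" content="NOINDEX,FOLLOW"/></head>'
    "<body></body></html>"
)

if __name__ == "__main__":
    print(robots_directives(SAMPLE_HTML))
```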

Custom Instructions for Magento 2 Robots.txt

For Allowing Full Access

    User-agent: *
    Disallow:

For Disallowing Access to All Folders

    User-agent: *
    Disallow: /
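These two variants differ by a single slash, so it is worth checking which one you actually saved. Here is a small sketch that uses Python’s standard `urllib.robotparser` to compare them; the `can_crawl` helper and the example.com URL are placeholders of mine.

```python
from urllib.robotparser import RobotFileParser

ALLOW_ALL = "User-agent: *\nDisallow:\n"    # empty Disallow: nothing is blocked
BLOCK_ALL = "User-agent: *\nDisallow: /\n"  # "/" is a prefix of every path
SAMPLE_URL = "http://www.example.com/any-page.html"


def can_crawl(rules, url, agent="*"):
    """Return True if `agent` may crawl `url` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    print("full access:", can_crawl(ALLOW_ALL, SAMPLE_URL))
    print("no access:", can_crawl(BLOCK_ALL, SAMPLE_URL))
```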

Default Instructions

    Disallow: /lib/
    Disallow: /*.php$
    Disallow: /pkginfo/
    Disallow: /report/
    Disallow: /var/
    Disallow: /catalog/
    Disallow: /customer/
    Disallow: /sendfriend/
    Disallow: /review/
    Disallow: /*SID=

To Disallow Duplicate Content:

    Disallow: /tag/
    Disallow: /review

To Disallow User Account and Checkout Pages

    Disallow: /checkout/
    Disallow: /onestepcheckout/
    Disallow: /customer/
    Disallow: /customer/account/
    Disallow: /customer/account/login/
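Because a Disallow rule matches every URL that starts with its path, some of these lines overlap: `/customer/` already covers `/customer/account/` and `/customer/account/login/`. Keeping the longer rules is harmless, but if you want to spot such overlaps, a rough sketch like this one could help. The `redundant_rules` helper is hypothetical, and it only handles plain prefix rules without wildcards.

```python
def redundant_rules(paths):
    """Map each rule to a shorter rule in `paths` that already covers it."""
    covered = {}
    for path in paths:
        for other in paths:
            # A rule is redundant when another rule is a prefix of it.
            if other != path and path.startswith(other):
                covered.setdefault(path, other)
    return covered


ACCOUNT_RULES = [
    "/checkout/",
    "/onestepcheckout/",
    "/customer/",
    "/customer/account/",
    "/customer/account/login/",
]

if __name__ == "__main__":
    for rule, broader in redundant_rules(ACCOUNT_RULES).items():
        print(f"{rule} is already covered by {broader}")
```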

To Disallow CMS Directories:

    Disallow: /app/
    Disallow: /bin/
    Disallow: /dev/
    Disallow: /lib/
    Disallow: /phpserver/
    Disallow: /pub/

To Disallow Catalog and Search Pages

    Disallow: /catalogsearch/
    Disallow: /catalog/product_compare/
    Disallow: /catalog/category/view/
    Disallow: /catalog/product/view/

To Disallow URL Filter Searches

    Disallow: /*?dir*
    Disallow: /*?dir=desc
    Disallow: /*?dir=asc
    Disallow: /*?limit=all
    Disallow: /*?mode*

The process to configure Robots.txt in Magento 2.x is now complete.

Final Words

If you are configuring a robots.txt file for the very first time, don’t worry. It is easier than it sounds. A well-configured file helps improve the SEO of your Magento store, which in turn can boost sales and conversions.


I hope that after following this tutorial, you have learned how to easily configure Magento Robots.txt and Magento 2 Robots.txt. Still, if you have any confusion or want to discuss anything related to this guide, just use the comment box below!